
Online Grooming Prevention Chatbot

ShieldBot (Cyber Shield) is an educational web app that helps teens, caregivers, and educators recognize and respond to online grooming. Through realistic micro-scenarios (strangers met in games, fake casting managers, and social-media peers), users practice safe conversations in a guided chat interface. When risky language appears, the app shows an immediate warning explaining why the message is suspicious and offers a short, interactive quiz to reinforce key safety rules: protect your privacy, do not share photos, refuse requests for secrecy, and talk to a trusted adult. A serverless backend simulates plausible replies while protecting privacy and preventing explicit content; the system also flags red-flag phrases to teach pattern recognition. Designed for schools, youth programs, and families, ShieldBot is lightweight, mobile-friendly, and deployable via static hosting with serverless functions. Its goal is simple: build awareness, boost confidence, and give users concrete responses to stay safe online.

Chatbot

Process

ShieldBot — Technical Process & Project Statement

ShieldBot is an educational web application that trains teens, caregivers, and educators to recognize and respond to online grooming. It uses scenario-driven chat simulations backed by a serverless AI pipeline and layered safety controls to provide realistic practice while avoiding harmful content.

My role & key contributions

I led the full product cycle from concept to deployment, combining UX design, prompt engineering, backend engineering, and safety engineering.

Responsibilities and outcomes included:

Product design & UX: Designed the micro-scenario flow, chat UI, modal warnings, and quiz interactions to maximize clarity and short-form learning.

AI integration: Engineered system prompts and API integration with the OpenAI Responses API to simulate plausible conversational replies while enforcing strict content rules.

Safety systems: Built multi-layered guards (prompt constraints, regex-based red-flag detection, server-side fallbacks) to prevent explicit or exploitative content.

Deployment & operations: Implemented a serverless architecture on Netlify (Functions), configured environment secrets securely, and automated GitHub → Netlify CI/CD.

Testing & evaluation: Wrote unit tests for rule detectors, ran integration tests, and structured pilot usability tests with teachers/students.

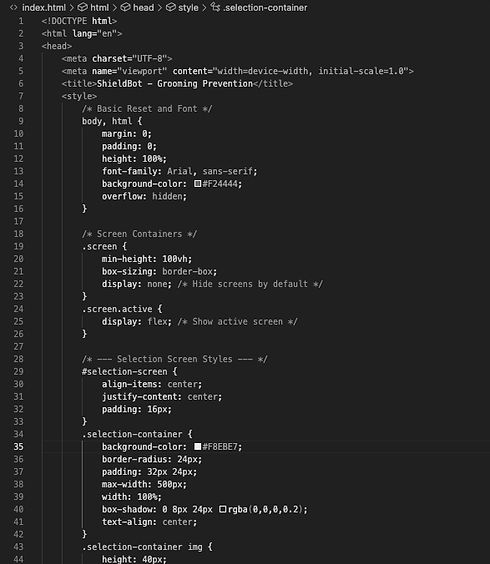

Architecture

Frontend: Static HTML/CSS/JavaScript single page — scenario selection, conversation UI, warning modal, quiz modal. Focused on accessibility and mobile responsiveness.

Backend: Netlify Functions (Node) providing /api/chat and diagnostic endpoints.

AI: OpenAI Responses API (e.g., gpt-4.1-mini) called from the serverless function.

Safety layers: (1) system prompt with hard rules, (2) regex-based red-flag detection, (3) server fallbacks when keys/requests fail, (4) environment-based controls for preview vs production.

Deployment: GitHub (source) → Netlify (automatic builds + serverless function bundling). Secrets stored in Netlify environment variables.

Technical Design - AI & Safety

Prompt engineering

I designed a compact system prompt that instructs the model to:

- Adopt the role of the “other person” (casting manager, gamer, peer).

- Use PG-13 language, respond in ≤30 words, and never ask for sexual, explicit, or identifying information from minors.

- Follow scenario hints to vary tone/lexicon per scenario while adhering to the safety constraints.
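The prompt rules above can be assembled roughly as follows. This is a minimal sketch, not the project's actual prompt: the names `SCENARIO_HINTS`, `BASE_RULES`, and `buildSystemPrompt`, the scenario keys, and all hint wording are hypothetical and only illustrate how fixed hard rules and a per-scenario hint might be combined.

```javascript
// Hypothetical scenario hints; keys and wording are illustrative assumptions.
const SCENARIO_HINTS = new Map([
  ["gamer", "You are a stranger the user met in an online game. Casual tone, gaming slang."],
  ["casting", "You claim to be a casting manager. Flattering, professional-sounding tone."],
  ["peer", "You are a peer who contacted the user on social media. Friendly, informal tone."],
]);

// Hard rules shared by every scenario, paraphrased from the list above.
const BASE_RULES = [
  "Play the role of the other person in the chat, never the user.",
  "Use PG-13 language only.",
  "Reply in 30 words or fewer.",
  "Never ask a minor for sexual, explicit, or identifying information.",
];

// Combine the fixed rules with one scenario hint into a single prompt string.
function buildSystemPrompt(scenario) {
  // Unknown scenario keys fall back to the peer persona (an assumed default).
  const hint = SCENARIO_HINTS.get(scenario) ?? SCENARIO_HINTS.get("peer");
  return [...BASE_RULES, `Scenario: ${hint}`].join("\n");
}
```

Keeping the hard rules in one array and the scenario hint in a separate lookup means the tone can vary per scenario without any path that drops a safety rule.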

Runtime flow (serverless function)

Client posts { messages[], scenario } to /api/chat.

Server validates method and checks for OPENAI_API_KEY.

If key present: build input combining system prompt, scenario hint, and conversation history; call OpenAI Responses API.

Extract ai.output_text.

Run regex-based detectors to set isGroomingAttempt.

Return JSON { reply: { content, isGroomingAttempt } }.

If an exception occurs or the key is missing, return a safe fallback response (always JSON with HTTP 200 so the client can render a user-friendly message).
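The runtime flow above might look like the following sketch of a Netlify-style function handler. Several details are assumptions rather than the project's code: `makeChatHandler`, `callModel`, `detect`, and `FALLBACK_REPLY` are hypothetical names, the 405 response for non-POST requests is one possible reading of "validates method", and the model call is injected so the flow can be exercised without network access.

```javascript
// Safe fallback used when the key is missing or anything throws (wording is illustrative).
const FALLBACK_REPLY = {
  content: "The practice chat is unavailable right now. Please try again later.",
  isGroomingAttempt: false,
};

// Build a JSON response in the shape Netlify Functions return.
const json = (statusCode, payload) => ({
  statusCode,
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify(payload),
});

// callModel and detect are injected (hypothetical names) so the flow is testable offline.
function makeChatHandler({ callModel, detect, systemPrompt }) {
  return async function handler(event) {
    // Assumption: non-POST requests are rejected with 405.
    if (event.httpMethod !== "POST") {
      return json(405, { error: "Method not allowed" });
    }
    try {
      const apiKey = process.env.OPENAI_API_KEY;
      if (!apiKey) return json(200, { reply: FALLBACK_REPLY });

      // Client payload: { messages[], scenario }.
      const { messages = [], scenario = "" } = JSON.parse(event.body || "{}");
      const input = [
        { role: "system", content: `${systemPrompt}\nScenario: ${scenario}` },
        ...messages,
      ];

      const ai = await callModel({ apiKey, input });
      const content = ai.output_text;
      if (typeof content !== "string" || content === "") {
        throw new Error("empty model output");
      }
      return json(200, { reply: { content, isGroomingAttempt: detect(content) } });
    } catch (err) {
      // Any failure still returns HTTP 200 with a safe, renderable message.
      return json(200, { reply: FALLBACK_REPLY });
    }
  };
}
```

In a deployed function, `callModel` could wrap the OpenAI Node SDK's `client.responses.create({ model, input })` and return its result, and the handler returned by `makeChatHandler` would be exported as the function's `handler`. Injecting the call keeps the fallback path easy to test.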

Red-flag detector (rule-based)

I implemented compact, auditable patterns such as:

secret|keep this between us|don't tell

send (a )?(pic|selfie|photo|video)

meet (up|alone)|hang out just us

platform mentions (Snap, Discord, Telegram, Kakao, WhatsApp)

PII probes: where do you live|what school

financial inducements: i'll pay|send you money
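A rule-based detector built from the patterns above could be sketched like this. The pattern alternatives come from the list; the category labels, the added `\b` word boundaries, the `snap(chat)?` variant, the apostrophe normalization, and the names `RED_FLAG_PATTERNS`, `detectRedFlags`, and `isGroomingAttempt` are assumptions made for illustration.

```javascript
// Each rule pairs an assumed category label with one case-insensitive pattern.
const RED_FLAG_PATTERNS = [
  { label: "secrecy", re: /\b(secret|keep this between us|don't tell)\b/i },
  { label: "media request", re: /\bsend (a )?(pic|selfie|photo|video)\b/i },
  { label: "meeting", re: /\b(meet (up|alone)|hang out just us)\b/i },
  { label: "platform switch", re: /\b(snap(chat)?|discord|telegram|kakao|whatsapp)\b/i },
  { label: "PII probe", re: /\b(where do you live|what school)\b/i },
  { label: "financial inducement", re: /\b(i'll pay|send you money)\b/i },
];

// Return the labels of every rule that matches the message.
function detectRedFlags(text) {
  // Normalize curly apostrophes so "don’t tell" matches the same rule as "don't tell".
  const normalized = String(text).replace(/[\u2018\u2019]/g, "'");
  return RED_FLAG_PATTERNS.filter(({ re }) => re.test(normalized)).map(({ label }) => label);
}

// Boolean flag in the shape the chat response uses.
function isGroomingAttempt(text) {
  return detectRedFlags(text).length > 0;
}

console.log(detectRedFlags("Can you send a selfie? Keep this between us."));
// prints [ 'secrecy', 'media request' ]
console.log(isGroomingAttempt("Good game, want to play again tomorrow?"));
// prints false
```

Returning labels rather than a bare boolean keeps each flag auditable and gives the warning modal something specific to explain. The word boundaries are there so that benign words such as "secretary" do not trigger the secrecy rule.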

Testing & Evaluation

Unit tests: Regex detector tests with positive/negative cases to avoid false positives on benign language.

Integration tests: Local netlify dev runs connecting frontend → functions → mock or real Responses API.

Usability pilots: Short school pilot sessions measuring the recognition rate of simulated grooming messages, the change in quiz accuracy before and after the interaction, and qualitative feedback on the clarity of warnings.

Monitoring: Netlify Functions logs for runtime errors; plan to add Sentry/Logflare for production error aggregation.
